
Resource limiting CPU and Memory in Kubernetes

In my previous post, we saw how to configure a Kubernetes cluster, how to deploy pods and how to grow the cluster. In this post I am going to show how to limit CPU and memory resources in a Kubernetes deployment. Resources can also be limited at the namespace level, which will be covered in a later post.

I am going to use a special image, vish/stress. This image has options for allocating CPU and memory, which can be passed as arguments to run a stress test.

My Master and Worker nodes each have 4GB of memory and 2 CPU cores, and both run in VirtualBox.

First, create the deployment from the vish/stress image; the node pulls the image if it is not already present.

vikki@drona-child-1:~$ kubectl run stress-test --image=vish/stress

deployment.apps "stress-test" created

Wait until the pod's status changes to Running.
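Instead of re-running kubectl get pods by hand, its output can be filtered for pods that are not yet Running. This is a minimal sketch, assuming the default column layout (NAME READY STATUS RESTARTS AGE); `not_running` is a hypothetical helper name, not a kubectl feature. It checks the STATUS column, which is where states such as ContainerCreating and CrashLoopBackOff appear.

```shell
#!/usr/bin/env bash
# not_running (hypothetical helper): read `kubectl get pods` output on stdin
# and print NAME and STATUS for every pod whose STATUS is not "Running".
# Assumes the default columns: NAME READY STATUS RESTARTS AGE.
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage: poll every 5 seconds until no pod is listed as not Running.
#   while kubectl get pods | not_running | grep -q .; do sleep 5; done
```

For a Deployment, `kubectl rollout status deployment/stress-test` is a built-in way to wait for a rollout to finish.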

vikki@drona-child-1:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-768979984b-mmgbj 1/1 Running 6 65d

stress-test-7795ffcbb-r9mft 0/1 ContainerCreating 0 6s

vikki@drona-child-1:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-768979984b-mmgbj 1/1 Running 6 65d

stress-test-7795ffcbb-r9mft 1/1 Running 0 32s

Now check the logs of the container. In my case, the Docker container ID for pod stress-test-7795ffcbb-r9mft is e8e43da13b23.

You can also use "kubectl logs stress-test-7795ffcbb-r9mft" (but it did not work on my server).

The log shows that it is allocating 0 memory: by default, the stress image does not allocate any memory or CPU.

[root@drona-child-3 ~]# docker logs e8e43da13b23

I0819 13:01:00.200714 1 main.go:26] Allocating "0" memory, in "4Ki" chunks, with a 1ms sleep between allocations

I0819 13:01:00.200804 1 main.go:29] Allocated "0" memory

Limiting Memory and CPU for the pod

We are going to make this deployment allocate more memory and also set resource limits for CPU and memory. Finally, we will monitor how the deployment behaves.

Export the YAML for the current deployment “stress-test”, then add the resource limits and the memory/CPU allocation arguments.

vikki@drona-child-1:~$ kubectl get deployment stress-test -o yaml > stress.test.yaml

vikki@drona-child-1:~$ vim stress.test.yaml

In the edited file, I restricted the CPU to 1 core and the memory to 4GB using the limits option. I also added arguments that make the stress tool use 2 cores and allocate 5050Mi of memory (about 5GB).

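The requests and limits options are roughly analogous to soft and hard limits in Linux: requests are what the scheduler reserves for the container when placing it on a node, while limits are caps that are enforced at run time.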
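A sketch of a complete Deployment with these settings is below: a limit of 1 CPU and 4GB of memory, plus stress arguments for 2 CPUs and 5050Mi of memory in 100Mi chunks with a 1s sleep (the chunk size and sleep match the container log later in this post). The `apps/v1` API version, the `run: stress-test` label, writing 4GB as `4Gi`, the request values and the file name `stress-deploy.yaml` are all assumptions, not taken from the author's file. The heredoc only writes the manifest, so the snippet runs without a cluster.

```shell
#!/usr/bin/env bash
# Write a hypothetical Deployment manifest (stress-deploy.yaml) with CPU/memory
# requests, limits and vish/stress arguments. Values marked "assumed" are not
# from the post.
cat > stress-deploy.yaml <<'EOF'
apiVersion: apps/v1            # assumed; the post's cluster reported deployment.extensions
kind: Deployment
metadata:
  name: stress-test
spec:
  replicas: 1
  selector:
    matchLabels:
      run: stress-test         # assumed label, as set by older `kubectl run`
  template:
    metadata:
      labels:
        run: stress-test
    spec:
      containers:
      - name: stress-test
        image: vish/stress
        resources:
          limits:              # hard caps: 1 CPU and 4GB memory (from the post)
            cpu: "1"
            memory: "4Gi"      # assumed binary unit for "4GB"
          requests:            # assumed values; used by the scheduler for placement
            cpu: "500m"
            memory: "2500Mi"
        args:                  # ask the stress tool for more than the limits allow
        - -cpus
        - "2"
        - -mem-total
        - "5050Mi"
        - -mem-alloc-size
        - "100Mi"
        - -mem-alloc-sleep
        - "1s"
EOF

# Then, on a real cluster:
#   kubectl apply -f stress-deploy.yaml
```

With values like these, the CPU limit only throttles the stress threads to one core's worth of CPU time, whereas a container that exceeds its memory limit is OOM-killed.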

The worker node has only 4GB of memory in total, so I am over-allocating memory on purpose, just to check the behaviour. In a real deployment you would set limits below the available memory and CPU; otherwise the limits make no sense.
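Memory quantities like 5050Mi and 4Gi use binary suffixes (1Mi = 1024 * 1024 bytes), so comparing them in bytes shows how far the stress test overshoots. A minimal sketch; `to_bytes` is a hypothetical helper that understands only integer values with the Ki, Mi and Gi suffixes, while real Kubernetes quantities accept more forms (for example decimal suffixes such as M and G).

```shell
#!/usr/bin/env bash
# to_bytes (hypothetical helper): convert an integer memory quantity with a
# binary suffix to bytes. Handles plain integers and Ki, Mi, Gi only.
to_bytes() {
  local q=$1
  case $q in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    ''|*[!0-9]*) echo "unsupported quantity: $q" >&2; return 1 ;;
    *) echo "$q" ;;
  esac
}

requested=$(to_bytes 5050Mi)   # what the stress tool tries to allocate
limit=$(to_bytes 4Gi)          # the container's memory limit (assumed 4Gi)
if [ "$requested" -gt "$limit" ]; then
  echo "over limit by $(( (requested - limit) / 1024 / 1024 ))Mi"
fi
```

Run as-is, it prints `over limit by 954Mi`: 5050Mi is 954Mi more than 4Gi (4096Mi).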

Now replace the deployment with the new YAML and wait for the pods to reach Running status.

vikki@drona-child-1:~$ kubectl replace -f stress.test.yaml

deployment.extensions "stress-test" replaced

vikki@drona-child-1:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-768979984b-mmgbj 1/1 Running 6 65d

stress-test-7795ffcbb-r9mft 0/1 ContainerCreating 0 8s

stress-test-78944d5478-wmmtc 0/1 Terminating 0 20m

vikki@drona-child-1:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-768979984b-mmgbj 1/1 Running 6 65d

stress-test-7795ffcbb-r9mft 1/1 Running 0 32s

vikki@drona-child-1:~$

Check the logs in the container. It is trying to allocate 5050Mi of memory and use 2 CPUs.

[root@drona-child-3 ~]# docker logs a9ebee58d3ca

I0819 13:36:36.618405 1 main.go:26] Allocating "5050Mi" memory, in "100Mi" chunks, with a 1s sleep between allocations

I0819 13:36:36.618655 1 main.go:39] Spawning a thread to consume CPU

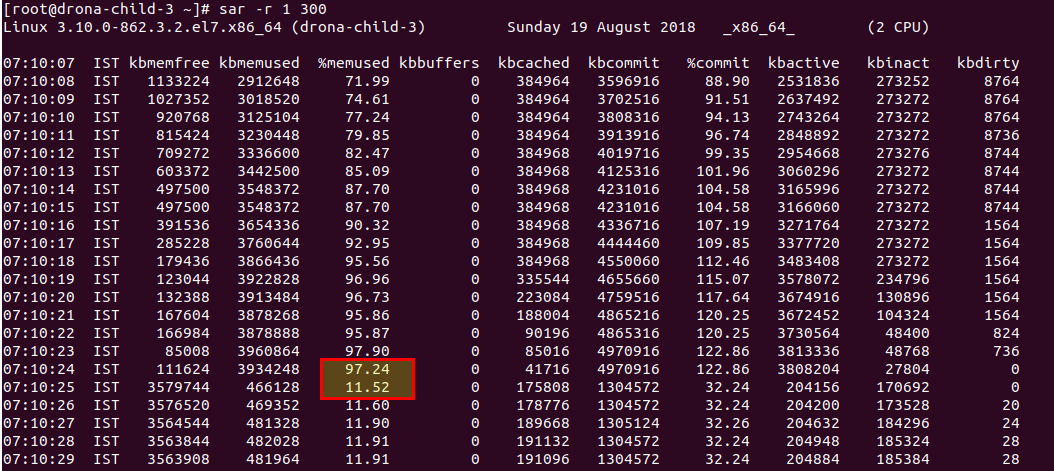

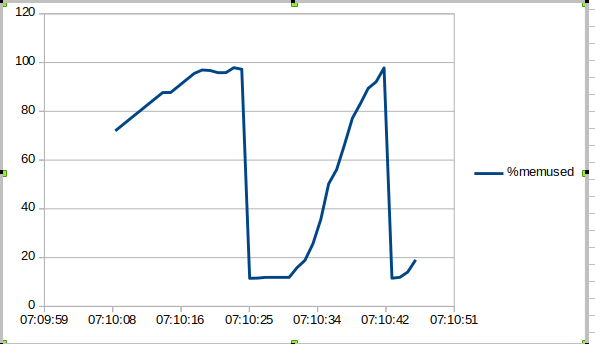

I0819 13:36:36.618674 1 main.go:39] Spawning a thread to consume CPUOpen a new terminal and monitor the Memory usage.

The memory will slowly raises to full usage and finally drops .After some attempts the pod will go “Crashloopbackoff” status.

Memory value plotted in graph
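One way to collect memory numbers like the ones in these graphs is to sample /proc/meminfo on the worker node. This is a minimal sketch, not necessarily how the graphs were produced; `mem_used_mib` is a hypothetical helper name, and it treats used memory as MemTotal minus MemAvailable.

```shell
#!/usr/bin/env bash
# mem_used_mib (hypothetical helper): print used memory in MiB, computed as
# MemTotal - MemAvailable (both reported in kB by /proc/meminfo).
# Reads /proc/meminfo by default; another file can be passed, e.g. for testing.
mem_used_mib() {
  awk '/^MemTotal:/     { total = $2 }
       /^MemAvailable:/ { avail = $2 }
       END { printf "%d\n", (total - avail) / 1024 }' "${1:-/proc/meminfo}"
}

# Usage on the worker node: one timestamped sample every 5 seconds,
# in a form that is easy to plot (stop with Ctrl-C).
#   while sleep 5; do echo "$(date +%T) $(mem_used_mib)"; done
```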

vikki@drona-child-1:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-768979984b-mmgbj 1/1 Running 6 65d

stress-test-57bb689598-8h4zm 0/1 CrashLoopBackOff 3 4m

vikki@drona-child-1:~$

To find out why the container was terminated, we can run kubectl describe pod. In our case it clearly says OOMKilled, and the container has been restarted 26 times.

vikki@drona-child-1:~$ kubectl describe pod stress-test-dbbcf4fd7-pqh99 |grep -A 5 State

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: OOMKilled

Exit Code: 137

Started: Sun, 19 Aug 2018 21:23:01 +0530

Finished: Sun, 19 Aug 2018 21:23:24 +0530

Ready: False
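Exit code 137 follows the usual convention of 128 plus a signal number: 137 - 128 = 9, i.e. SIGKILL, the signal the kernel's OOM killer sends. A small sketch that decodes such codes; `describe_exit` is a hypothetical helper, and the pod name in the usage comment is the one from this post.

```shell
#!/usr/bin/env bash
# describe_exit (hypothetical helper): explain a container exit code.
# Codes above 128 conventionally mean "terminated by signal (code - 128)".
describe_exit() {
  local code=$1 sig name
  if [ "$code" -gt 128 ]; then
    sig=$((code - 128))
    # kill -l maps a signal number to its name, e.g. 9 -> KILL
    if name=$(kill -l "$sig" 2>/dev/null); then
      name="SIG$name"
    else
      name="unknown signal"
    fi
    echo "exit code $code: killed by signal $sig ($name)"
  else
    echo "exit code $code: exited with status $code (not killed by a signal)"
  fi
}

# Usage against a live pod:
#   describe_exit "$(kubectl get pod stress-test-dbbcf4fd7-pqh99 \
#     -o jsonpath='{.status.containerStatuses[0].lastState.terminated.exitCode}')"
```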

vikki@drona-child-1:~$ kubectl describe pod stress-test-dbbcf4fd7-pqh99 |grep -i restart

Restart Count: 26

Warning BackOff 2m (x480 over 2h) kubelet, drona-child-3 Back-off restarting failed container

vikki@drona-child-1:~$
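The two grep commands above can be folded into one filter that prints only the last termination reason and the restart count. A sketch, assuming one container per pod and the `kubectl describe` field layout shown above; `crash_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# crash_summary (hypothetical helper): read `kubectl describe pod` output on
# stdin and print the reason the container last terminated and its restart
# count. Assumes one container per pod.
crash_summary() {
  awk '/Last State:/         { in_last = 1 }
       in_last && /Reason:/  { print "last reason:", $2; in_last = 0 }
       /Restart Count:/      { print "restarts:", $3 }'
}

# Usage:
#   kubectl describe pod stress-test-dbbcf4fd7-pqh99 | crash_summary
```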
